Quickstart Guide

- Go to the DEEP Marketplace

- Browse available modules

- Find the module you are interested in and get it

Let’s explore what we can do with it!

Run a module locally

Requirements

If GPU support is needed:

- you can install nvidia-docker along with docker, OR

- install udocker instead of docker. udocker is entirely a user-space tool, i.e. it can be installed and used without any root privileges, e.g. in a user environment on an HPC cluster.

N.B.: Starting from version 19.03, docker supports NVIDIA GPUs directly, so the separate nvidia-docker command is not needed (see Release notes and moby/moby#38828).

Run the container

Run the Docker container directly from Docker Hub:

Via docker command:

$ docker run -ti -p 5000:5000 -p 6006:6006 deephdc/deep-oc-module_of_interest

Via udocker:

$ udocker run -p 5000:5000 -p 6006:6006 deephdc/deep-oc-module_of_interest

With GPU support:

$ nvidia-docker run -ti -p 5000:5000 -p 6006:6006 deephdc/deep-oc-module_of_interest

If docker version is 19.03 or above:

$ docker run -ti --gpus all -p 5000:5000 -p 6006:6006 deephdc/deep-oc-module_of_interest

Via udocker with GPU support:

$ udocker pull deephdc/deep-oc-module_of_interest

$ udocker create --name=module_of_interest deephdc/deep-oc-module_of_interest

$ udocker setup --nvidia module_of_interest

$ udocker run -p 5000:5000 -p 6006:6006 module_of_interest
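Which GPU command applies depends on the installed docker version, so the choice can be scripted. The following is a minimal sketch, not part of docker or of the DEEP tooling: `gpu_run_prefix` is a hypothetical helper name, and it assumes that `sort -V` (version sort, as provided by GNU coreutils) is available on your system.

```shell
#!/bin/sh
# Hypothetical helper (not part of docker or DEEP): print the command prefix
# for a GPU-enabled run, given a docker version string such as "19.03.5".
gpu_run_prefix() {
    version="$1"
    # Reject anything that does not look like MAJOR.MINOR[...]
    case "$version" in
        [0-9]*.[0-9]*) ;;
        *) echo "unrecognised docker version: $version" >&2; return 1 ;;
    esac
    # Assumption: `sort -V` (version sort, GNU coreutils) is available.
    # If 19.03 sorts first (or ties), the given version is >= 19.03.
    oldest=$(printf '%s\n' "19.03" "$version" | sort -V | head -n 1)
    if [ "$oldest" = "19.03" ]; then
        echo "docker run -ti --gpus all"
    else
        echo "nvidia-docker run -ti"
    fi
}

# Example (requires a running docker daemon, so it is left commented out):
#   $(gpu_run_prefix "$(docker version --format '{{.Server.Version}}')") \
#       -p 5000:5000 -p 6006:6006 deephdc/deep-oc-module_of_interest
```

The helper only prints the prefix, so you can inspect the chosen command before running anything. It does not cover udocker, which needs the separate setup steps listed above.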

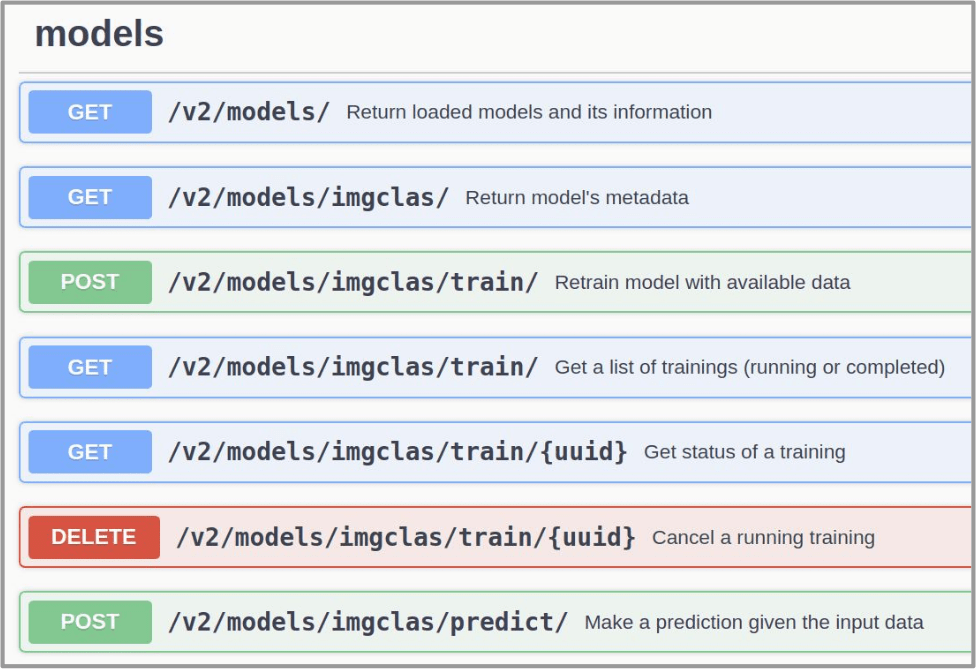

Access the module via API

To access the downloaded module via the DEEPaaS API, direct your web browser to http://0.0.0.0:5000/ui (if your browser does not accept the 0.0.0.0 address, try http://127.0.0.1:5000/ui instead). If you are training a model, you can go to http://0.0.0.0:6006 to monitor the training progress (if such monitoring is available for the model).
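The API may take a moment to come up after the container starts. As a rough sketch, a small polling loop can tell you when the page becomes reachable. `wait_for_api` is a hypothetical helper name, the default of 30 attempts with a 2 second pause is an arbitrary choice, and the loop assumes that `curl` is installed.

```shell
#!/bin/sh
# Hypothetical helper: poll a URL until it answers or the attempts run out.
# Arguments: URL, number of attempts (default 30), pause in seconds (default 2).
wait_for_api() {
    url="$1"
    attempts="${2:-30}"
    delay="${3:-2}"
    i=1
    while [ "$i" -le "$attempts" ]; do
        # -s: silent, -f: treat HTTP errors (>= 400) as failure,
        # -o /dev/null: discard the body, --max-time: cap each request
        if curl -sf -o /dev/null --max-time 5 "$url"; then
            echo "API is reachable at $url"
            return 0
        fi
        # Pause only if another attempt follows
        if [ "$i" -lt "$attempts" ]; then
            sleep "$delay"
        fi
        i=$((i + 1))
    done
    echo "gave up waiting for $url" >&2
    return 1
}

# Example, assuming the container was started with -p 5000:5000:
#   wait_for_api http://127.0.0.1:5000/ui
```

The function returns a non-zero status on timeout, so it can be chained with `&&` before opening the browser or sending requests.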

For more details on particular models, please read the module’s documentation.


Train a module on DEEP Pilot Infrastructure

Requirements

- DEEP-IAM registration

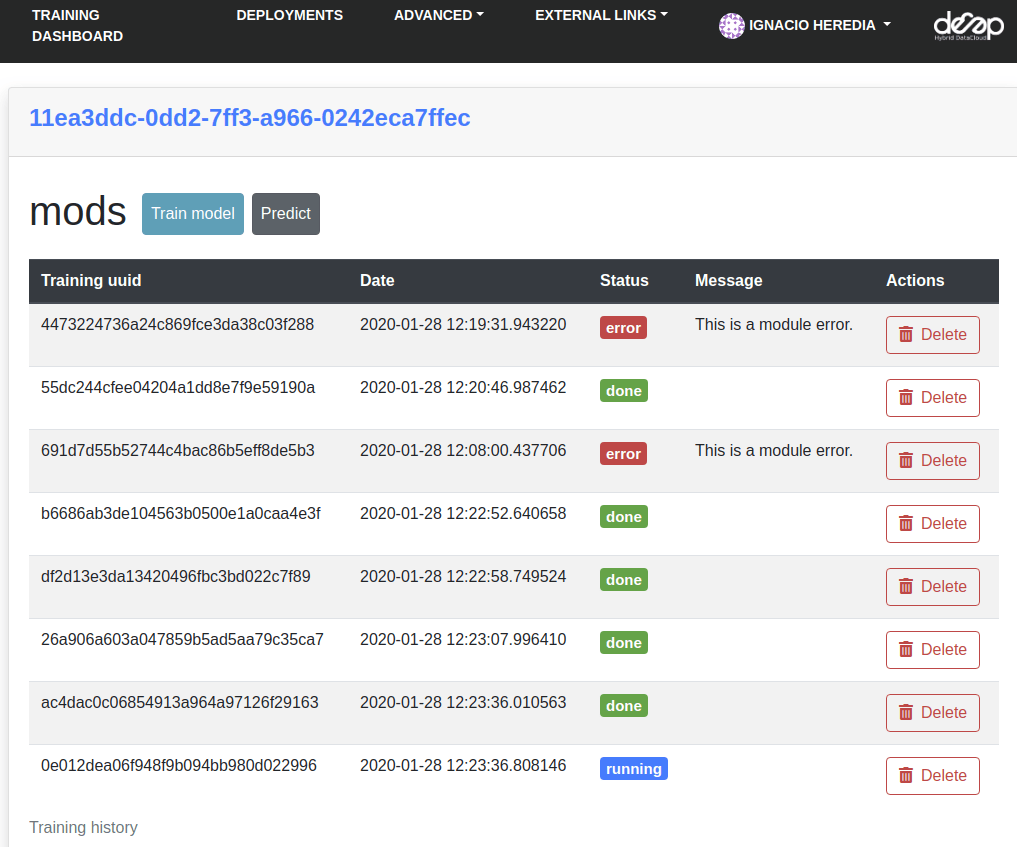

Running a module locally is sometimes not enough, since you may need more powerful computing resources (such as GPUs) to train a module faster. In that case, you can request DEEP-IAM registration and then deploy the module on the DEEP Pilot Infrastructure through the DEEP Dashboard. There you select the module you want to run and the computing resources you need. Once the module is deployed, you can train it and view the training history.
