Attention

The DEEP platform is sunsetting.

The DEEP-Hybrid-DataCloud project has ended and its platform and software are being decommissioned during 2023, as they have been superseded by the AI4EOSC platform and the AI4OS software stack.

Please refer to the following links for further information:

Try a service locally

Useful video demos

Requirements

This section requires Docker to be installed.

Starting from version 19.03, Docker supports NVIDIA GPUs (see here and here). If you are using an older version, you can try nvidia-docker instead.
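To decide which of the two GPU paths applies on a given machine, you can compare the installed Docker version against 19.03. The following is a minimal Bash sketch, not part of the DEEP tooling; the function name `docker_supports_gpus` is hypothetical, and it only looks at the major and minor version fields:

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of DEEP): succeed if a Docker version string
# such as "20.10.7" or "19.03.12" is 19.03 or newer.
docker_supports_gpus() {
  local version="$1" major minor
  major="${version%%.*}"   # text before the first dot, e.g. "19"
  minor="${version#*.}"    # text after the first dot, e.g. "03.12"
  minor="${minor%%.*}"     # keep only the minor field, e.g. "03"
  # Force base 10 so fields like "03" or "09" are not parsed as octal
  major=$((10#$major))
  minor=$((10#$minor))
  (( major > 19 || (major == 19 && minor >= 3) ))
}
```

You could feed it the server version reported by `docker version --format '{{.Server.Version}}'`; if the check fails, nvidia-docker is the fallback mentioned above.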

If you need to run containers in an environment without root privileges (e.g. an HPC cluster), check udocker instead of Docker.

1. Choose your module

The first step is to choose a module from the DEEP Open Catalog marketplace. For educational purposes, we will use a general-purpose image classification model, which lets us see the general workflow.

Once we have chosen the model in the DEEP Open Catalog marketplace, we will find that it has an associated Docker container on DockerHub. In the example we are running here, the container is deephdc/deep-oc-image-classification-tf, so to pull its Docker image you should run:

$ docker pull deephdc/deep-oc-image-classification-tf

Docker images usually have tags that depend on whether they are built from the master or the test branch and whether they use CPU or GPU. The usual tags are:

- latest or cpu: master + cpu
- gpu: master + gpu
- cpu-test: test + cpu
- gpu-test: test + gpu
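The tag scheme above is a simple mapping from (branch, device) to a suffix, which can be scripted when switching between variants. A minimal sketch with hypothetical function names; since tags only *usually* follow this scheme, check the module's DockerHub page for the tags it actually publishes:

```shell
#!/usr/bin/env bash
# Hypothetical helper: map a branch (master|test) and a device (cpu|gpu)
# to the usual DEEP-OC tag. "latest" is an alias of "cpu" (master + cpu).
deep_oc_tag() {
  local branch="$1" device="$2"
  case "${branch}:${device}" in
    master:cpu) echo "cpu" ;;
    master:gpu) echo "gpu" ;;
    test:cpu)   echo "cpu-test" ;;
    test:gpu)   echo "gpu-test" ;;
    *)
      echo "unknown branch/device: ${branch}/${device}" >&2
      return 1
      ;;
  esac
}

# Build a full image reference such as
# "deephdc/deep-oc-image-classification-tf:gpu-test"
deep_oc_image() {
  local repo="$1" tag
  tag="$(deep_oc_tag "$2" "$3")" || return 1
  echo "${repo}:${tag}"
}
```

For example, `docker pull "$(deep_oc_image deephdc/deep-oc-image-classification-tf test gpu)"` pulls the same image as the gpu-test command that follows.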

So, if you want the GPU image built from the test branch, you can run:

$ docker pull deephdc/deep-oc-image-classification-tf:gpu-test

Instead of pulling the image from DockerHub, you can build it yourself:

$ git clone https://github.com/deephdc/deep-oc-image-classification-tf

$ cd deep-oc-image-classification-tf

$ docker build -t deephdc/deep-oc-image-classification-tf .

2. Launch the API and predict

Run the container with:

$ docker run -ti -p 5000:5000 -p 6006:6006 -p 8888:8888 deephdc/deep-oc-image-classification-tf
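The command above runs the CPU image. With a GPU tag and Docker 19.03 or newer, the container also needs access to the GPUs, which Docker grants through its `--gpus` flag (this additionally assumes the NVIDIA Container Toolkit is installed on the host). A hypothetical wrapper sketching this, which keeps the same port mappings and adds `--gpus all` only for tags ending in `gpu` or `gpu-test`; setting `DRY_RUN=1` prints the command instead of running it:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the docker run command for DEEP-OC images.
# Adds --gpus all for GPU tags (needs Docker >= 19.03 + NVIDIA Container Toolkit).
deep_oc_run() {
  local image="$1"
  local -a cmd=(docker run -ti -p 5000:5000 -p 6006:6006 -p 8888:8888)
  case "$image" in
    *:gpu|*:gpu-test) cmd+=(--gpus all) ;;
  esac
  cmd+=("$image")
  if [[ -n "${DRY_RUN:-}" ]]; then
    echo "${cmd[*]}"   # only show what would be executed
  else
    "${cmd[@]}"
  fi
}
```

For instance, `deep_oc_run deephdc/deep-oc-image-classification-tf:gpu` starts the GPU image with the same ports as the CPU example.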

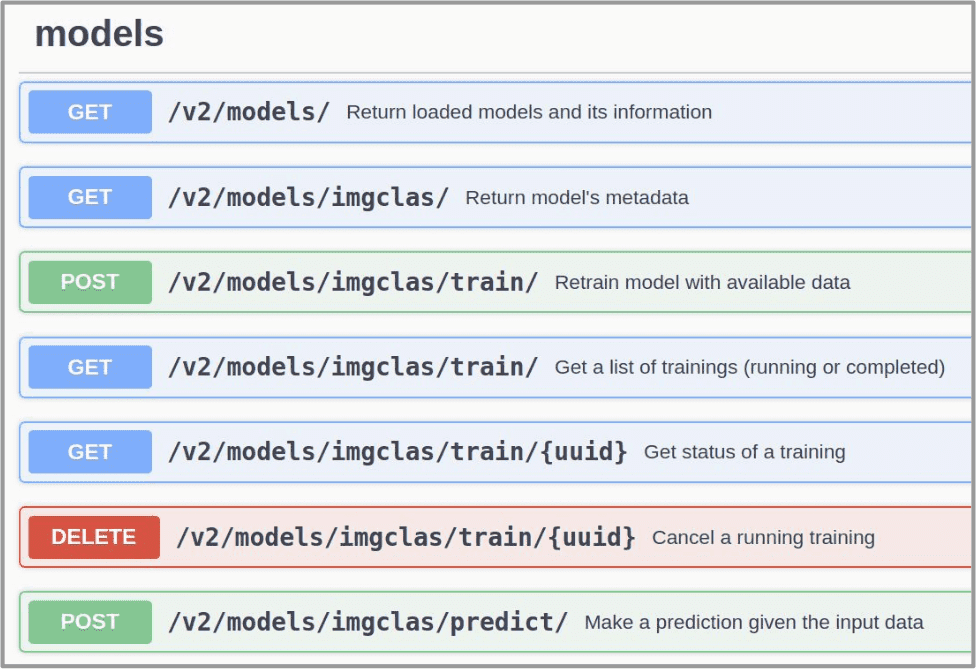

Once the container is running, point your browser to http://127.0.0.1:5000/ui to see the API documentation, where you can test the module’s functionality and perform other actions.

Go to the predict() function and upload the file or data you want to predict on (for the image classifier, this should be an image file). The accepted data formats are usually described in the module’s Marketplace page or in its GitHub README file.
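The same predict() call can also be made from the command line. The sketch below assumes the module is served through a DEEPaaS V2-style API, where models are listed under `/v2/models/` and the predict endpoint is `/v2/models/<model>/predict/`. The model name (e.g. `imgclas`) and the form field name (e.g. `image`) are assumptions; check both in the /ui page, which usually also shows the equivalent curl command after you execute a request there.

```shell
#!/usr/bin/env bash
# Hypothetical helpers, assuming a DEEPaaS V2-style API in the local container.
DEEPAAS_URL="${DEEPAAS_URL:-http://127.0.0.1:5000}"

# Predict endpoint for a model name as listed at $DEEPAAS_URL/v2/models/
predict_url() {
  echo "${DEEPAAS_URL}/v2/models/$1/predict/"
}

# POST a local file as multipart form data and print the JSON response.
# $1: model name, $2: form field name (see /ui), $3: path to the file
predict_file() {
  curl -fsS -X POST "$(predict_url "$1")" \
    -H "accept: application/json" \
    -F "$2=@$3"
}
```

Usage, with the assumed names: `predict_file imgclas image ./my_image.jpg`.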

The response from the predict() function varies from module to module, but it usually consists of a JSON dict with the predictions. For example, the image classifier returns a list of predicted classes along with their predicted probabilities.

Other modules might return files (e.g. images, zips, …) instead of a JSON response.
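Because a predict() response may be either JSON or a file, a command-line client may need to branch on the response's Content-Type. A minimal sketch with hypothetical function names, using curl's `-w '%{content_type}'` write-out variable while the body itself is saved with `-o`:

```shell
#!/usr/bin/env bash
# Classify a Content-Type value: JSON body vs. any other (file) payload.
response_kind() {
  case "$1" in
    application/json*) echo "json" ;;
    *) echo "file" ;;
  esac
}

# Hypothetical helper: POST file $3 as form field $2 to URL $1, save the body
# to $4, then report whether it is JSON or a file. Requires curl.
save_prediction() {
  local url="$1" field="$2" input="$3" out="$4" ctype
  ctype="$(curl -fsS -X POST "$url" -F "${field}=@${input}" \
    -o "$out" -w '%{content_type}')" || return 1
  echo "$(response_kind "$ctype"): ${out}"
}
```

A JSON result can then be inspected as text, while anything else is treated as a downloaded file, such as an image or a zip archive.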